Crafting Realistic Visuals for XR

Extended Reality (XR) keeps blurring the line between the real and the virtual, largely thanks to realistic graphics. However, crafting lifelike visuals is a complex process that requires capable hardware, knowledge of rendering techniques, and careful attention to detail in design and materials. This article explores the elements that contribute to realistic XR visuals: resolution, scale, materials, lighting, and optimization techniques. By applying these insights, practical tips, and real-world examples, 3D enthusiasts can take the user experience of their XR applications to the next level.

Device Specifications

When talking about Virtual Reality (VR) and Mixed Reality (MR), resolution and display technology play a major part in achieving lifelike visuals. The quality of the user’s visual experience relies heavily on the pixel density of the display. Higher resolutions help reduce the undesirable “screen door effect”, a common issue where users perceive a mesh-like pattern overlaying the image, detracting from the immersive experience.
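
Pixel density in a headset is often expressed as pixels per degree (PPD) of the field of view. The snippet below is a minimal sketch of that estimate; it assumes pixels are spread evenly across the field of view (real lenses distort this), and the two headsets are hypothetical examples, not real product specifications.

```python
def pixels_per_degree(horizontal_pixels_per_eye: int,
                      horizontal_fov_degrees: float) -> float:
    """Average angular pixel density across the horizontal field of view.

    Assumes an even pixel distribution, which ignores lens distortion.
    """
    if horizontal_fov_degrees <= 0:
        raise ValueError("field of view must be positive")
    return horizontal_pixels_per_eye / horizontal_fov_degrees


# Hypothetical headsets with the same field of view but different panels.
low_density = pixels_per_degree(1440, 100.0)
high_density = pixels_per_degree(2880, 100.0)

print(f"lower-resolution panel:  {low_density:.1f} PPD")
print(f"higher-resolution panel: {high_density:.1f} PPD")
```

At the same field of view, doubling the horizontal resolution doubles the angular density, which is why higher-resolution panels make the gaps between pixels harder to notice.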

To further refine visual fidelity, leading-edge devices incorporate sophisticated rendering techniques. Foveated rendering is one such innovation. It concentrates high-resolution rendering where the user’s gaze is directed and lowers the resolution in the periphery, which saves performance while preserving perceived visual quality.

Users can choose from a diverse range of devices with different screens and hardware, which lets them select the option that best matches their needs.

In the Mixed Reality (MR) domain, cameras play a central role. Optical and infrared cameras capture the real world, allowing devices to integrate digital content into the user’s surroundings seamlessly. As camera technology develops, MR experiences will offer improved depth perception, object recognition, and environmental understanding, which in turn will make them more realistic.
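
The foveated rendering idea described earlier in this section can be sketched as a function that maps the angle between the gaze direction and a screen region to a resolution scale. This is an illustrative sketch only: the function names, the 10 and 30 degree thresholds, and the scale values are assumptions, and real headsets implement this in the GPU driver or runtime.

```python
import math


def angle_between_degrees(a, b):
    """Angle in degrees between two non-zero 3D direction vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(x * x for x in b))
    # Clamp to guard against rounding slightly outside [-1, 1].
    cos_angle = max(-1.0, min(1.0, dot / (norm_a * norm_b)))
    return math.degrees(math.acos(cos_angle))


def resolution_scale(gaze_dir, region_dir, inner_deg=10.0, outer_deg=30.0):
    """Pick a render-resolution scale from the angular distance to the gaze.

    The thresholds and scales are hypothetical values for illustration.
    """
    eccentricity = angle_between_degrees(gaze_dir, region_dir)
    if eccentricity <= inner_deg:
        return 1.0   # foveal region: full resolution
    if eccentricity <= outer_deg:
        return 0.5   # mid periphery: half resolution
    return 0.25      # far periphery: quarter resolution


gaze = (0.0, 0.0, -1.0)  # user looks straight ahead
side = (math.sin(math.radians(45)), 0.0, -math.cos(math.radians(45)))
print(resolution_scale(gaze, gaze))
print(resolution_scale(gaze, side))
```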

The Scale of Virtual Worlds

One aspect often overlooked in creating realistic visuals is the scale of 3D models. Accurate scale is paramount to achieving an authentic experience. In virtual environments, users interact with objects and spaces that need to mirror real-world proportions. Misjudging the scale of 3D models can lead to disorientation and break the illusion of immersion. To avoid this, developers and designers must meticulously calibrate the size of virtual objects and environments to match human perception and the device camera angle. This approach ensures that users feel a genuine presence within the virtual spaces.

In Virtual Reality (VR) applications, composition also needs careful consideration, since users can move freely within virtual spaces. The artist’s goal is to provide a coherent and enjoyable experience by striking the right balance between the composition’s small, medium, and large forms and by preventing visual clutter.
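
A common source of scale errors is a mismatch between the units a model was authored in and the units the target engine expects. The sketch below assumes an engine where one unit equals one meter and a hypothetical door model authored in centimeters; the helper names are illustrative and not part of any particular tool.

```python
def uniform_scale_factor(model_height_units: float,
                         real_height_meters: float) -> float:
    """Scale factor that makes the model's height match its real-world height."""
    if model_height_units <= 0:
        raise ValueError("model height must be positive")
    return real_height_meters / model_height_units


def scale_vertices(vertices, factor):
    """Apply a uniform scale to a list of (x, y, z) vertex positions."""
    return [(x * factor, y * factor, z * factor) for x, y, z in vertices]


# Hypothetical door authored in centimeters: 90 wide, 200 tall.
door_vertices = [(0.0, 0.0, 0.0), (90.0, 0.0, 0.0),
                 (90.0, 200.0, 0.0), (0.0, 200.0, 0.0)]

factor = uniform_scale_factor(model_height_units=200.0, real_height_meters=2.0)
door_in_meters = scale_vertices(door_vertices, factor)
print(factor)
print(door_in_meters[2])
```

Checking a few reference objects with well-known sizes (doors, chairs, tables) against real measurements is a quick way to catch scale problems before they reach the headset.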

Materials and Details

Materials are essential in bringing Extended Reality (XR) environments to life, and high-quality textures are the artist’s toolkit for generating realistic visuals. To integrate virtual objects seamlessly into XR landscapes, the artist needs to pay attention to surface details such as color distribution, reflections, and normal maps. Additionally, procedural generation techniques significantly enhance the depth and variety of XR environments, enabling the crafting of complex and diverse virtual worlds.

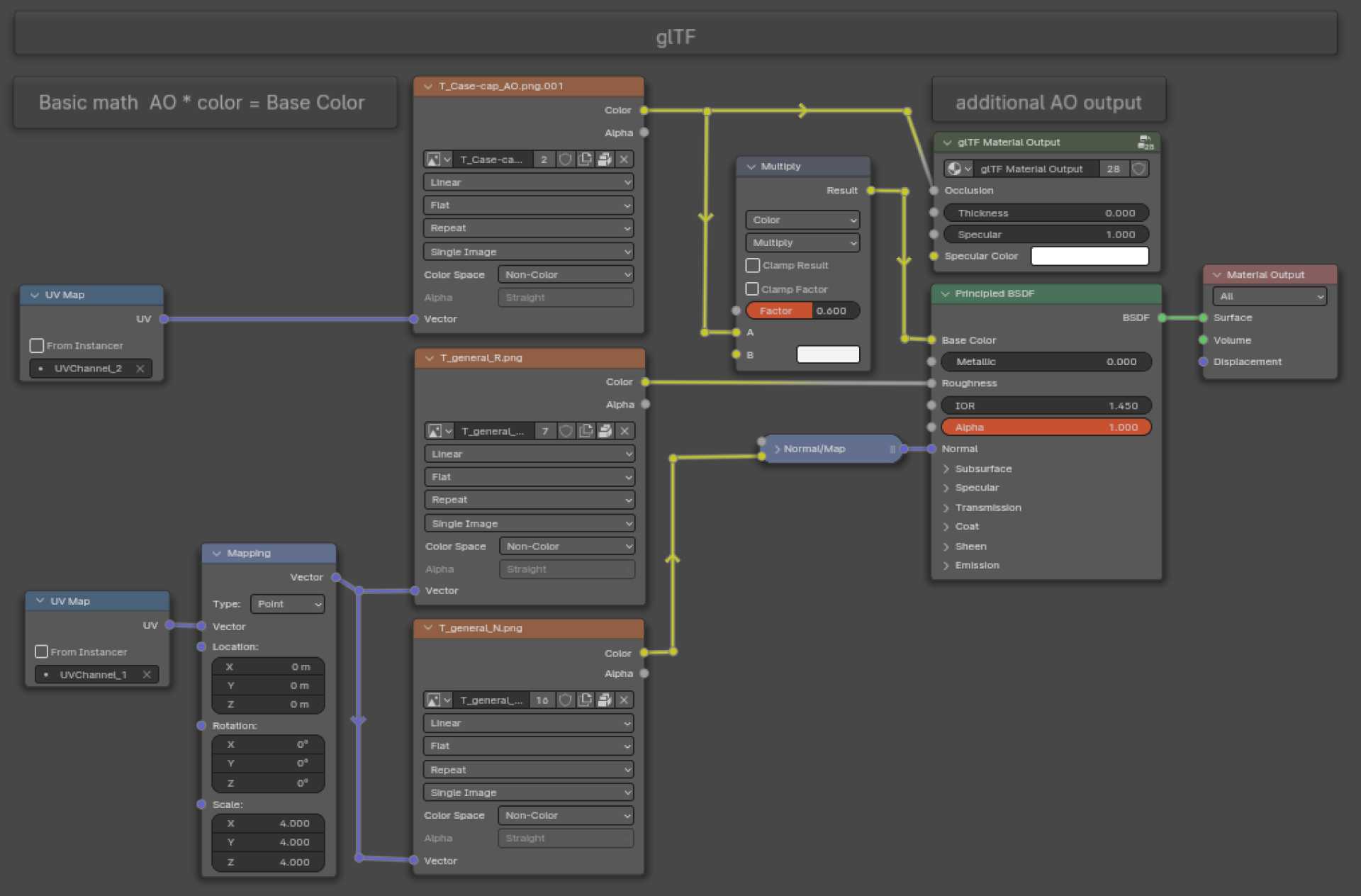

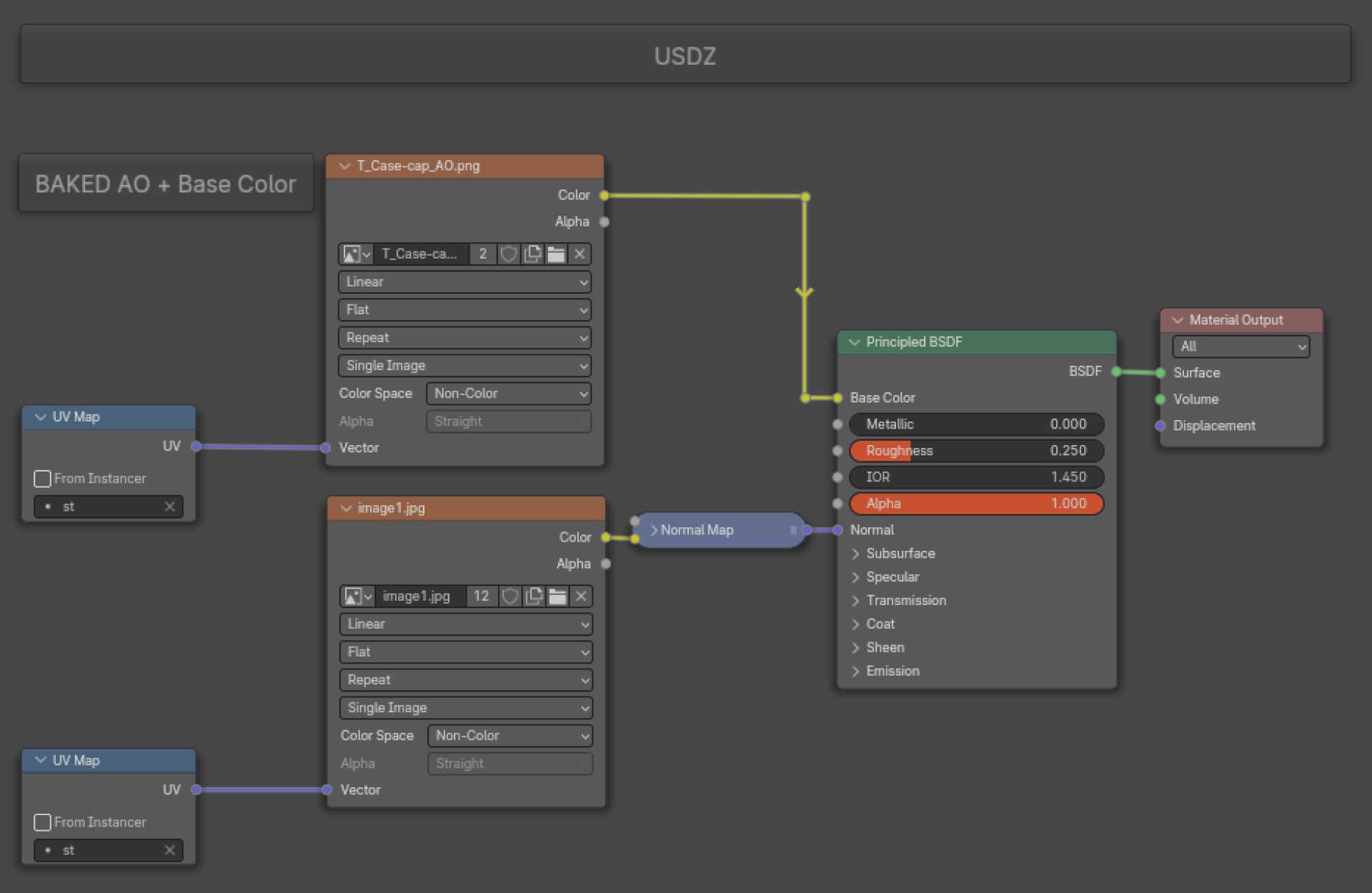

When developing Augmented Reality (AR) applications, it is essential to focus on the textures that can be used in the two main delivery formats: glTF for Android AR and USDZ for iOS AR. The USDZ format requires additional effort to bake outputs and minimize texture count. Meanwhile, the glTF format shows impressive results when using material nodes, as shown in the images above. It’s important to note that glTF files generally have a smaller file size than USDZ files.

When modeling in 3D, you may need to add topology complexity to some meshes to achieve the desired level of surface detail. One effective way to do this is to bevel 90-degree edges and use weighted normals. This technique reduces the aliasing that appears as stair-stepped pixels along hard edges at certain viewing angles. Bevels help eliminate jagged edges and improve reflections, while weighted normals ensure accurate alignment with normal maps, enhancing the overall visual fidelity of the 3D models.

However, it is important to remember that normal maps, which are often used to add surface detail and depth in traditional 2D screen applications, do not provide the same experience in Virtual Reality (VR) environments. In VR, stereoscopic viewing and head movement change how depth and detail are perceived, and they can reveal that a normal-mapped surface is actually flat. Therefore, when planning and designing VR content, it is essential to transfer most of the large and medium details directly onto the mesh.
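
To illustrate what weighted normals do, the sketch below computes area-weighted vertex normals for a triangle mesh: each face contributes to its vertices in proportion to its area, so large flat faces dominate over small bevel faces. This is one common weighting scheme shown under simplifying assumptions; modeling tools typically offer other modes as well, and the function names here are hypothetical.

```python
import math


def _sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def area_weighted_normals(vertices, triangles):
    """Return one unit normal per vertex, weighted by adjacent face area.

    The cross product of two triangle edges has a length of twice the
    triangle's area, so summing unnormalized face normals weights by area.
    """
    sums = [[0.0, 0.0, 0.0] for _ in vertices]
    for i0, i1, i2 in triangles:
        face = _cross(_sub(vertices[i1], vertices[i0]),
                      _sub(vertices[i2], vertices[i0]))
        for index in (i0, i1, i2):
            for axis in range(3):
                sums[index][axis] += face[axis]

    normals = []
    for total in sums:
        length = math.sqrt(sum(c * c for c in total))
        if length > 0.0:
            normals.append(tuple(c / length for c in total))
        else:
            normals.append((0.0, 0.0, 0.0))  # vertex not used by any face
    return normals


# A large face in the XY plane and a small face in the XZ plane share an edge.
verts = [(0.0, 0.0, 0.0), (4.0, 0.0, 0.0), (0.0, 4.0, 0.0), (0.0, 0.0, 1.0)]
tris = [(0, 1, 2), (0, 1, 3)]
normals = area_weighted_normals(verts, tris)
print(normals[0])  # leans toward the large face's +Z normal
```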

Lighting

In Extended Reality (XR), achieving realistic visuals requires effective use of lighting, with shadows being one of the pillars of realism. Unfortunately, due to limited device capabilities, this endeavor presents performance challenges and requires greater screen expansion. A practical way to balance realism and performance is to precompute the lighting.

One such technique is light baking inside the game engine. It precomputes lighting calculations and stores them in special textures, which reduces the computational load at runtime. This approach is particularly useful in VR and AR applications, where real-time rendering is critical. Through light baking, developers can deliver visually rich experiences with global illumination that are both smooth and responsive, balancing visual fidelity with the operational constraints of XR technologies.

Another method is to bake the lighting directly onto base color maps. It can produce higher-quality lighting that can be used in various virtual environments, and it is mainly used in AR or web applications. However, this technique may require significant resources, primarily because it needs numerous large textures.
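
The core idea of light baking, computing lighting once and looking it up at runtime, can be shown with a deliberately simplified sketch. It bakes direct diffuse light from a single point light onto a flat floor and ignores shadows and bounced light, which real engine bakers do handle. All names and values are illustrative assumptions.

```python
import math


def bake_floor_lightmap(size, floor_extent, light_pos, light_intensity):
    """Precompute diffuse lighting for a flat floor at y = 0 (normal +Y).

    Returns a size x size grid of brightness values. The light is assumed
    to sit above the floor (light_pos[1] > 0).
    """
    lightmap = []
    for row in range(size):
        texels = []
        for col in range(size):
            # World-space position of the texel center on the floor.
            x = (col + 0.5) / size * floor_extent
            z = (row + 0.5) / size * floor_extent
            to_light = (light_pos[0] - x, light_pos[1], light_pos[2] - z)
            dist_sq = sum(c * c for c in to_light)
            # Lambert term: cosine between the floor normal and the light.
            cos_theta = max(0.0, to_light[1] / math.sqrt(dist_sq))
            texels.append(light_intensity * cos_theta / dist_sq)
        lightmap.append(texels)
    return lightmap


def sample_lightmap(lightmap, u, v):
    """Runtime lookup: nearest texel for UV coordinates in [0, 1]."""
    size = len(lightmap)
    col = min(size - 1, max(0, int(u * size)))
    row = min(size - 1, max(0, int(v * size)))
    return lightmap[row][col]


# Bake once (offline), then sample cheaply every frame.
baked = bake_floor_lightmap(size=4, floor_extent=4.0,
                            light_pos=(2.0, 2.0, 2.0), light_intensity=10.0)
print(sample_lightmap(baked, 0.5, 0.5))  # near the light
print(sample_lightmap(baked, 0.0, 0.0))  # floor corner
```

The expensive loop runs once during the bake, while the runtime cost per lookup stays constant regardless of how complex the lighting calculation was.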

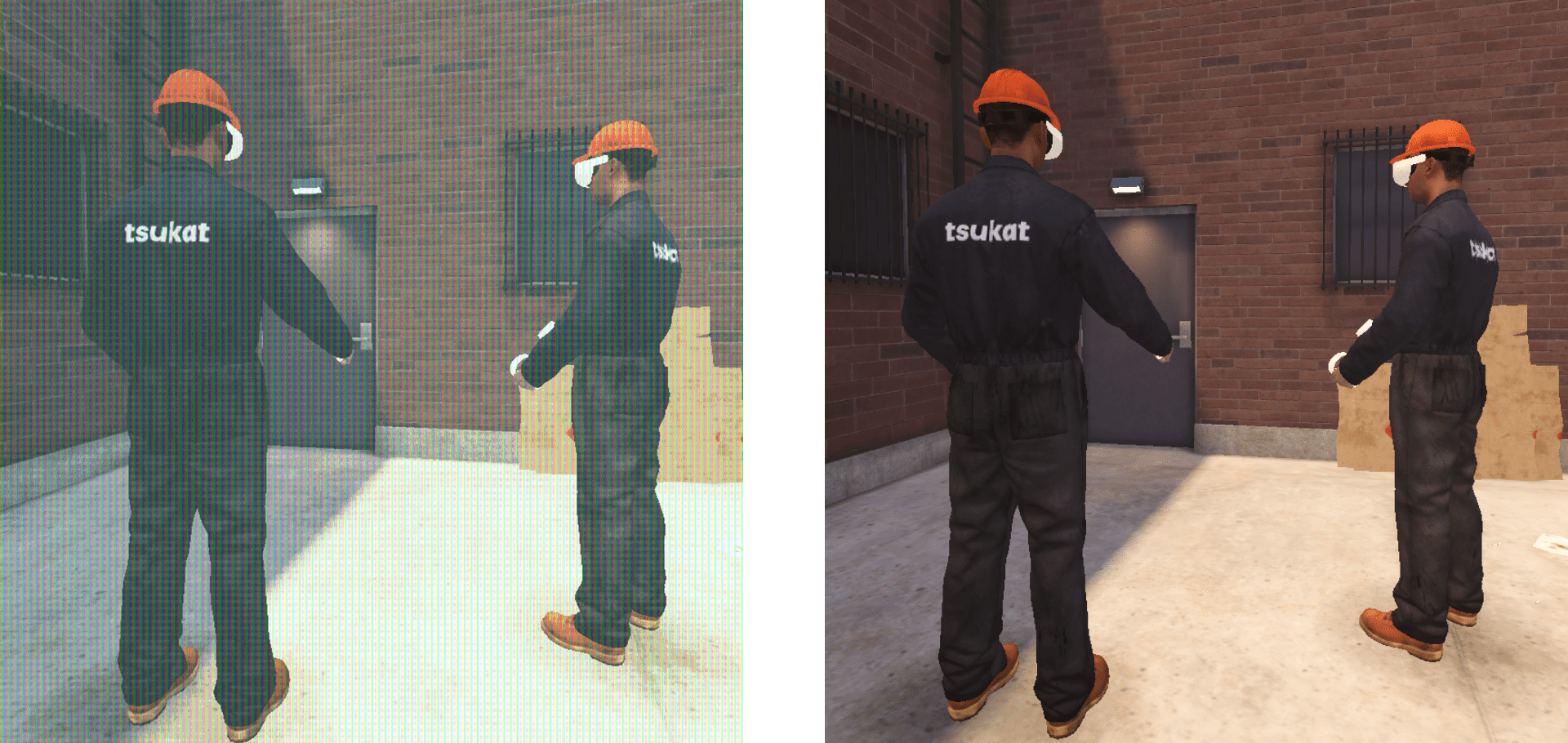

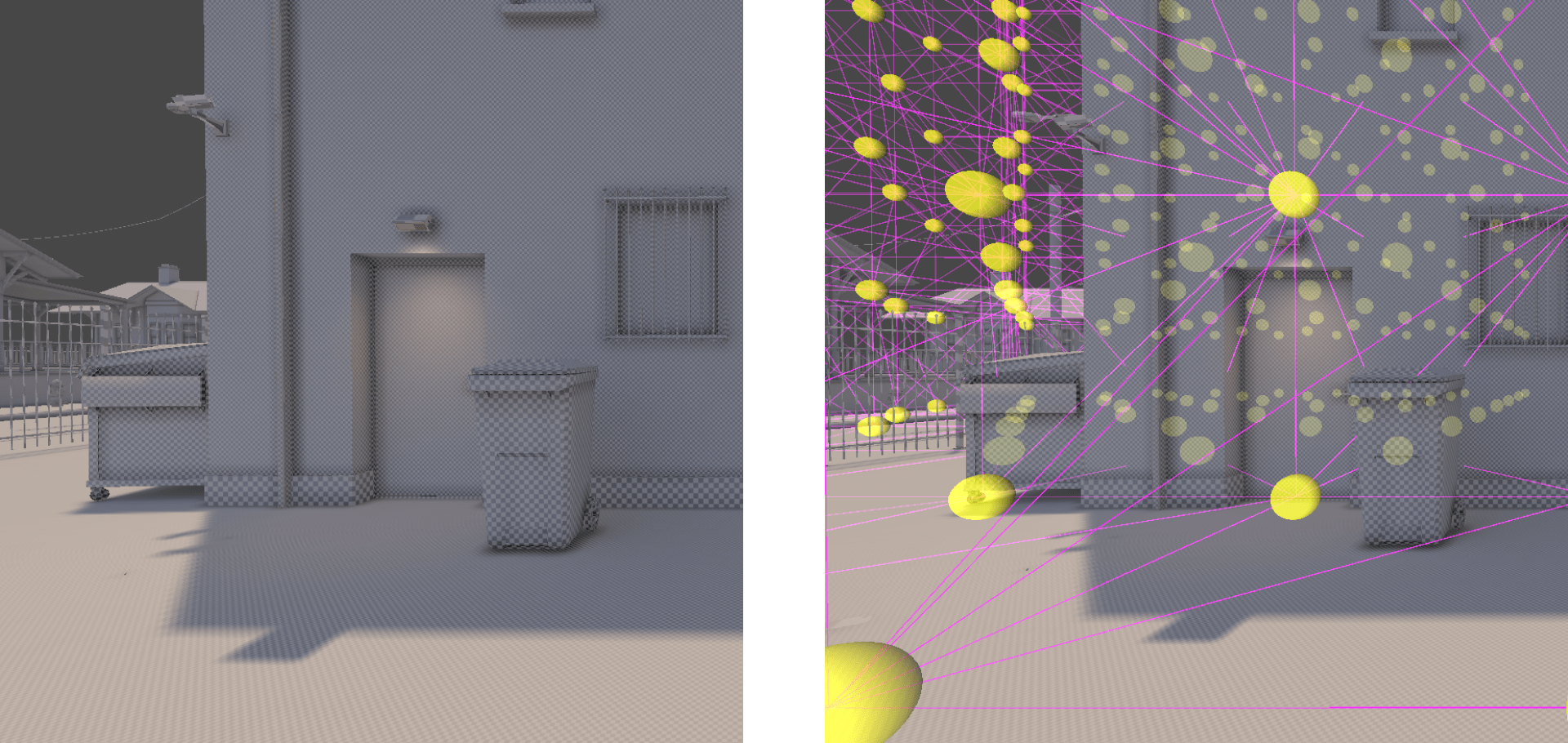

When developing Virtual Reality (VR) applications, it’s recommended to use a lightmap baking process inside the game engine. Still, if users will interact with the environment and see other people moving, it is better to add light probes. That is because a lightmap is a static texture depicting how light interacts with surfaces under specific conditions. Although lightmaps result in higher-quality lighting, they do not change during the experience and do not affect moving objects in the scene. Light probes, in contrast, let users observe how objects change color as they move between bright and dark areas. Additionally, they enhance the realism of the scene by depicting how the surface of movable objects interacts with the direct color of light.
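
The way a moving object picks up lighting from nearby probes can be approximated with a simple blend. The sketch below uses inverse-distance weighting of probe colors as a stand-in; game engines typically store directional lighting per probe and use more elaborate interpolation, so treat the function and its data as illustrative assumptions.

```python
import math


def blend_probes(position, probes, epsilon=1e-6):
    """Blend probe colors at a position using inverse-square-distance weights.

    probes is a list of (probe_position, (r, g, b)) pairs.
    """
    if not probes:
        raise ValueError("at least one probe is required")

    weighted = [0.0, 0.0, 0.0]
    total_weight = 0.0
    for probe_pos, color in probes:
        distance = math.dist(position, probe_pos)
        if distance < epsilon:
            return tuple(color)  # standing exactly on a probe
        weight = 1.0 / (distance * distance)
        total_weight += weight
        for channel in range(3):
            weighted[channel] += weight * color[channel]
    return tuple(c / total_weight for c in weighted)


# Hypothetical probes: one in a bright area, one in a dark corner.
probes = [((0.0, 0.0, 0.0), (1.0, 1.0, 1.0)),
          ((10.0, 0.0, 0.0), (0.0, 0.0, 0.0))]

# An object moving from the bright probe toward the dark one gets darker.
for x in (1.0, 5.0, 9.0):
    print(x, blend_probes((x, 0.0, 0.0), probes))
```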

Optimization Techniques

Similarly to light baking, other optimization techniques are important for creating realistic visuals and stable performance. One of them is a level-of-detail (LOD) system that dynamically adjusts the detail of 3D models based on the user’s proximity and optimizes resource usage. LOD is particularly beneficial in large virtual environments where rendering high-detail models at a distance may be unnecessary. As the user gets closer to an object, the LOD system seamlessly transitions to more detailed models, ensuring a balance between visual quality and computational efficiency.

Occlusion culling is another technique that renders only visible objects and reduces the rendering workload. This technique is particularly effective in complex scenes where numerous objects may be present but are not simultaneously visible. By intelligently excluding occluded objects from rendering calculations, the approach contributes to a smoother and more responsive experience in Extended Reality (XR) applications.

These optimization techniques collectively contribute to a seamless XR experience, enhancing performance without compromising visual quality.
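
The distance-based LOD selection described above can be sketched in a few lines. The thresholds and LOD names below are hypothetical, and many engines select LODs by on-screen size rather than raw distance, so this is a simplified illustration rather than any engine’s actual behavior.

```python
import math

# Hypothetical thresholds: (maximum distance in meters, LOD name).
LOD_DISTANCES = [(5.0, "LOD0"), (15.0, "LOD1"), (40.0, "LOD2")]


def select_lod(camera_pos, object_pos, lod_distances=LOD_DISTANCES):
    """Return the most detailed LOD allowed at the current distance.

    Objects beyond the last threshold are reported as "culled".
    """
    distance = math.dist(camera_pos, object_pos)
    for max_distance, lod_name in lod_distances:
        if distance <= max_distance:
            return lod_name
    return "culled"


camera = (0.0, 1.6, 0.0)  # roughly eye height, in meters
for z in (3.0, 10.0, 30.0, 100.0):
    print(z, select_lod(camera, (0.0, 1.6, z)))
```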

Challenges and Future Directions

As Extended Reality (XR) technology continues evolving, new ways of creating these applications emerge. Among the anticipated developments is application streaming through cloud technologies. This approach streams software applications from remote servers to end-user devices over the internet, resulting in better graphics, smoother performance, and reduced reliance on local processing power and storage.

WebGPU, a new generation of web graphics API, is also on the way, allowing for next-gen web applications with advanced graphics solutions. By giving web applications more direct access to the Graphics Processing Unit (GPU), WebGPU makes advanced rendering techniques such as ray tracing more practical in the browser, offering realistic lighting and reflections. Developers can leverage WebGPU for applications requiring intricate simulations, complex visualizations, or machine learning. Furthermore, its capabilities make it well-suited for crafting immersive web-based virtual and augmented reality experiences.

Regarding hardware, the release of the latest Meta Quest and Vision Pro versions showed how far camera technology has advanced. These devices drive the creation of cutting-edge Mixed Reality (MR) applications and allow the seamless addition of virtual objects and shadows to real environments.

Another aspect is the potential of MR technologies to enhance virtual training. By integrating artificial intelligence (AI) networks, it will be possible to create fully-fledged virtual assistants. These assistants can boost virtual training by providing features like personalized guidance, real-time feedback, and adaptive learning experiences tailored to each user’s needs. For example, virtual assistants can analyze user performance data, identify areas for improvement, and offer targeted recommendations or exercises to help users achieve their learning objectives more effectively. The industry is already shifting towards leveraging AI technologies for virtual training purposes, and by doing so, these environments will become more personalized, adaptive, and effective.

Conclusion

Creating realistic visuals that seamlessly integrate the physical and virtual worlds requires meticulous attention to detail from artists. They must consider pixel density, scaling, material selection, and lighting. As we look ahead, the trajectory of Extended Reality (XR) points towards cloud-based application streaming and the infusion of artificial intelligence (AI) in Mixed Reality (MR). These innovations promise to reshape how we interact with digital content and change methods to achieve lifelike visuals.

At tsukat, an XR and AI development company, high-quality and realistic visuals are inseparable from our solutions. Our engineers’ expertise and eye for detail empower us to deliver tangible results, whether industry-specific training simulations, groundbreaking R&D solutions, or innovative design experiences. Learn more about our services on our web page.