10 AI Tools for 3D Artists: Animation, Lip-Sync, 3D Model Generation

As artificial intelligence becomes more widespread in image-dependent industries, it’s making its way into other sectors such as gaming, architecture, and virtual reality, and changing how 3D content is produced. While AI’s role in image generation is well known, its potential goes much further: it can solve everyday challenges for 3D artists and studios.

AI tools are streamlining operations and enhancing creativity by simplifying asset management, improving character animation, and automating lip-sync. As a studio working extensively with 3D models, we are constantly looking for tools to optimize our processes. That’s why we’ve compiled a list of 10 promising AI-driven tools that are set to boost productivity in 3D creation. We hope these insights will benefit fellow 3D artists and help them pick the tools they actually need from the myriad of AI tools available.

1. Dash AI

Dash AI is a general-purpose tool for artists featuring an impressive asset library management system. It builds a well-tagged asset database by analyzing various aspects of each asset, including models, textures, technical specifications, images, and connected assets. The plugin streamlines search, allowing artists to quickly add assets to their scenes, which is particularly useful for quick drafts and client presentations. Beyond tagging, Dash AI offers another plugin that generates content from existing tools and scene compilations, adding further value to the workflow.
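Dash AI’s internal database format isn’t described here, so the following is only a minimal sketch of the general idea behind tag-based asset search: an inverted index that maps each tag to the assets carrying it, so a multi-tag query becomes a set intersection. The `Asset` and `TagIndex` names are hypothetical and not part of Dash AI’s API.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class Asset:
    # Hypothetical asset record; real tools store far richer metadata.
    name: str
    tags: set[str] = field(default_factory=set)


class TagIndex:
    """Minimal inverted index: tag -> names of assets carrying that tag."""

    def __init__(self) -> None:
        self._by_tag: dict[str, set[str]] = defaultdict(set)

    def add(self, asset: Asset) -> None:
        for tag in asset.tags:
            self._by_tag[tag.lower()].add(asset.name)

    def search(self, *tags: str) -> list[str]:
        # Assets must carry every requested tag (AND semantics);
        # results are sorted so the output order is stable.
        if not tags:
            return []
        matches = [self._by_tag.get(t.lower(), set()) for t in tags]
        return sorted(set.intersection(*matches))


# Usage: index a few hypothetical assets and query them.
index = TagIndex()
index.add(Asset("oak_tree_01", {"tree", "oak", "vegetation"}))
index.add(Asset("pine_tree_02", {"tree", "pine", "vegetation"}))
index.add(Asset("stone_wall", {"wall", "stone"}))
print(index.search("tree", "oak"))
```

An AI tagger’s job is to fill in the `tags` sets automatically; once they exist, lookup itself is cheap.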

2. Cascadeur

Cascadeur is a recently enhanced AI animation tool focused on physics-based pose adjustment and motion prediction. Using video references, the system analyzes movements and adds secondary motion, producing natural-looking draft animations. It also corrects character poses automatically, so artists no longer have to adjust every joint by hand: they move a single limb, such as an arm or a leg, and the rest of the pose follows naturally. As a supporting tool in our daily XR pipelines, Cascadeur can be a game-changer, especially when we need a quick, natural-looking animation.
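We don’t know how Cascadeur’s solver works internally, so the sketch below does not reproduce it. It shows the classic, non-AI building block behind “move one limb and the rest follows”: analytic two-bone inverse kinematics in 2D, where the law of cosines gives the shoulder and elbow angles that put the hand on a target. The function name and angle conventions are our own assumptions.

```python
import math


def two_bone_ik(l1: float, l2: float, tx: float, ty: float) -> tuple[float, float]:
    """Analytic 2D two-bone IK (e.g. upper arm + forearm, shoulder at the origin).

    Returns (shoulder, elbow) in radians: shoulder is the upper bone's angle
    from the +x axis; elbow is the forearm's bend relative to the upper bone
    (0 means the arm is fully straight).
    """
    dist = math.hypot(tx, ty)
    # Clamp to the reachable range; the tiny floor avoids dividing by zero.
    dist = max(abs(l1 - l2), min(l1 + l2, dist), 1e-9)
    # Law of cosines: interior angle at the elbow, converted to a bend angle.
    cos_elbow = (l1**2 + l2**2 - dist**2) / (2 * l1 * l2)
    elbow = math.pi - math.acos(max(-1.0, min(1.0, cos_elbow)))
    # Angle between the shoulder->target line and the upper bone.
    cos_inner = (l1**2 + dist**2 - l2**2) / (2 * l1 * dist)
    shoulder = math.atan2(ty, tx) - math.acos(max(-1.0, min(1.0, cos_inner)))
    return shoulder, elbow


# Usage: two unit-length bones reaching for the point (1, 1).
shoulder, elbow = two_bone_ik(1.0, 1.0, 1.0, 1.0)
print(f"shoulder {math.degrees(shoulder):.1f} deg, elbow bend {math.degrees(elbow):.1f} deg")
```

For this target the shoulder comes out at about 0 degrees and the elbow bend at about 90 degrees. A full-body solver chains many such constraints and adds balance and physics on top, which is where AI-assisted tools aim to save time.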

3. ML Deformer

Note that most of the plugins presented here work with Unreal Engine. For better or worse, Unity has seen far fewer AI tools so far, so keep this in mind.

ML Deformer, an Unreal Engine tool, leverages neural networks to improve character deformation. Traditional 3D animation often struggles to depict the human body’s natural movement accurately, especially in areas such as twisting forearms or bending limb joints. To fix this, animators usually add extra bones, morph targets, and blend shapes to their characters, a step that requires manual adjustments and heavy simulation calculations for optimal results. ML Deformer offers a more efficient solution. Setting it up still involves manual tasks such as pose correction and geometry caching, but the machine-learning process then analyzes the original mesh and its skeletal structure to create deformation maps. These maps enable real-time, natural-looking deformations, even for complex actions.
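The principle behind ML Deformer can be illustrated with a toy example. The sketch below is not Unreal’s implementation: it assumes a hypothetical 2D rig with a single elbow, uses a synthetic “ground truth” pose in place of a real simulation cache, and swaps the neural network for a per-vertex linear fit. It shows the two halves of the idea: cheap linear blend skinning (LBS), which loses volume as the joint bends, and a learned corrective offset added on top at runtime.

```python
import math
from collections.abc import Callable

Vec2 = tuple[float, float]

# Hypothetical toy rig: a static upper arm (bone 0) and a forearm (bone 1)
# that rotates by theta around an elbow pivot.
PIVOT: Vec2 = (1.0, 0.0)


def rotate_about_pivot(v: Vec2, angle: float) -> Vec2:
    dx, dy = v[0] - PIVOT[0], v[1] - PIVOT[1]
    c, s = math.cos(angle), math.sin(angle)
    return (PIVOT[0] + c * dx - s * dy, PIVOT[1] + s * dx + c * dy)


def skin_lbs(v: Vec2, w_forearm: float, theta: float) -> Vec2:
    """Linear blend skinning: blend the rest position (bone 0 never moves)
    with the position rigidly carried by the forearm (bone 1)."""
    r = rotate_about_pivot(v, theta)
    return ((1 - w_forearm) * v[0] + w_forearm * r[0],
            (1 - w_forearm) * v[1] + w_forearm * r[1])


def reference_pose(v: Vec2, theta: float) -> Vec2:
    # Synthetic stand-in for a high-quality simulation cache: the elbow vertex
    # keeps its distance from the joint and sits on the bisector of the bend.
    return rotate_about_pivot(v, theta / 2)


def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Ordinary least squares for y = a * x + b."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    a = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    return a, my - a * mx


# "Training": learn the corrective delta (reference minus LBS) as a function
# of the joint angle, for one elbow vertex with a 50/50 skin weight.
vertex, weight = (1.0, 0.2), 0.5
thetas = [i * (math.pi / 2) / 10 for i in range(11)]  # 0 to 90 degrees
deltas = [tuple(r - l for r, l in zip(reference_pose(vertex, t), skin_lbs(vertex, weight, t)))
          for t in thetas]
fit_x = fit_line(thetas, [d[0] for d in deltas])
fit_y = fit_line(thetas, [d[1] for d in deltas])


def skin_corrected(v: Vec2, w: float, theta: float) -> Vec2:
    # Runtime: cheap LBS plus the learned corrective offset
    # (fitted above for this single vertex only).
    x, y = skin_lbs(v, w, theta)
    return (x + fit_x[0] * theta + fit_x[1], y + fit_y[0] * theta + fit_y[1])


def mean_error(skin: Callable[[Vec2, float, float], Vec2]) -> float:
    return sum(math.dist(skin(vertex, weight, t), reference_pose(vertex, t))
               for t in thetas) / len(thetas)


print(f"LBS error: {mean_error(skin_lbs):.4f}, corrected: {mean_error(skin_corrected):.4f}")
```

At 90 degrees, plain LBS pulls this vertex to about 0.14 units from the joint instead of 0.2, the classic volume loss that corrective shapes exist to fix. A real ML deformer learns such offsets for thousands of vertices from many bone rotations at once.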

4. ML Cloth Deformer

ML Cloth Deformer is a specialized version of ML Deformer designed for cloth in 3D animation. It is trained on cloth-fold data and reproduces folds using the same logic as muscle deformers, generating a corrective map of blend shapes that lets the cloth move naturally on a character. For those familiar with real-time physics, achieving this level of detail is usually time-consuming and carries a significant performance cost. With this deformer, you get cloth that looks dynamically simulated, and the process is much faster and more efficient. All you need to do is prepare your assets, ensure their quality, and set up the ML Cloth Deformer to do its thing.

5. Motorica AI

Another AI animation tool is Motorica AI. It works well in Unreal Engine, can be used with other 3D programs such as Blender and Maya, and is also available as a standalone app. Motorica offers a set of predefined character styles, such as zombie, soldier, and nurse. Once you choose a character, the plugin can generate a short animation of it or animate its movement based on a predefined behavior preset. It’s simple and fast, making it ideal for short promos or drafts: the user selects a few key points, and the app handles the rest. One of its key benefits is that the app analyzes and predicts movement. For example, if the character needs to cover a long distance quickly, the app will make it run.

Additionally, characters can rotate and perform secondary motions. Best of all, the app is currently free to use. As for downsides, the set of character styles is somewhat limited and may not suit other kinds of 3D animation. For instance, if your virtual training has no zombie-like characters, you probably won’t need the app. Another drawback is that there are no settings for adjusting the final result, so make sure the output meets your requirements before adopting it.

6. Replica AI

In our previous project, we developed an AI persona named Zeus, combining a 3D avatar, Unity as a runtime, and AI-powered speech-to-text (STT) and text-to-speech (TTS) capabilities. While we successfully achieved seamless lip-sync manually, we were eager to explore AI-driven solutions for optimization.

That is how we discovered Replica AI, an Unreal Engine plugin designed to integrate lip-sync animation for AI avatars quickly and easily. Ideal for non-player characters (NPCs) in games or immersive experiences, Replica AI automatically connects to an AI chatbot and uses STT and TTS to convert speech and animate the 3D avatar’s lips accordingly. Users can input text and receive a spoken answer from a MetaHuman or other avatar. Like any app, though, it has drawbacks: lip movements don’t always match the voice and may require manual adjustment. If you’re considering it for your daily work, keep this in mind.

7. Reverie

This tool is highly anticipated by 3D artists working in Unreal Engine, but it’s still under development. According to its creators, Reverie can color-grade scenes with AI. The user supplies a reference image, and the AI automatically matches the scene’s colors and “mood” to it. While this feature is intriguing, it’s mainly useful for creating initial drafts: however fast the tuning is, a skilled artist still knows best how to set up a scene’s lighting, colors, and overall ambiance.

Another feature of the tool is 3D world generation from a single image. The user uploads a picture, for example of a forest, and the plugin builds a world in Unreal based on its characteristics, adding grass, lighting, and other objects.

8. Ludius AI

Ludius AI is an AI development assistant plugin for Unreal Engine that works as a chatbot. It lets users request Blueprints for their Unreal Engine projects through text prompts. It can be a helpful tool for experienced developers, saving them a lot of development time on 3D scenes. However, like other code generators, it has its drawbacks. For instance, it may not suit inexperienced developers, since AI-generated code can introduce issues that take troubleshooting expertise to fix. In such cases, users may spend more time explaining their requirements to the chatbot than benefiting from its help, because conveying complex concepts in simple language is hard.

We look forward to a future where neural networks understand user requests precisely from the outset. Until then, the user’s own expertise remains crucial.

9. Nvidia’s Omniverse

Nvidia’s Omniverse platform offers several AI-enabled features for 3D creation and adjustment. One standout solution is Audio2Face, a powerful tool that takes an audio track and automatically syncs a MetaHuman’s facial movements to it, producing natural-looking lip-sync animation. One of its key advantages is ease of use: there’s no need to export data from Nvidia’s toolkit, as the results can be streamed directly into Unreal Engine to animate a MetaHuman. The tool also allows users to fine-tune emotions and intonation within Omniverse Machinima, giving creators control over their characters’ facial expressions and tone.
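Audio2Face relies on a trained neural network, which we don’t reproduce here. For comparison, the simplest audio-driven lip-sync baseline maps the loudness of each animation frame’s slice of audio to a single “jaw open” blend-shape weight. The Python sketch below implements that naive approach; the function name and the `gain` and `smoothing` values are hypothetical. It only opens and closes the mouth without forming distinct mouth shapes, which is the kind of gap AI tools like Audio2Face go beyond.

```python
import math


def mouth_open_curve(samples: list[float], sample_rate: int, fps: int = 30,
                     gain: float = 4.0, smoothing: float = 0.5) -> list[float]:
    """Naive amplitude-driven lip-sync: one 'jaw open' weight in [0, 1] per frame.

    samples: mono audio in [-1, 1]. gain and smoothing are hypothetical tuning knobs.
    """
    hop = sample_rate // fps  # audio samples per animation frame
    weights: list[float] = []
    prev = 0.0
    for start in range(0, len(samples) - hop + 1, hop):
        window = samples[start:start + hop]
        # Loudness of this frame's audio slice (root mean square).
        rms = math.sqrt(sum(s * s for s in window) / len(window))
        target = min(1.0, rms * gain)
        # Exponential smoothing so the jaw doesn't flicker from frame to frame.
        prev = smoothing * prev + (1 - smoothing) * target
        weights.append(prev)
    return weights


# Usage: one second of silence followed by one second of a 220 Hz tone.
rate = 16000
silence = [0.0] * rate
tone = [0.3 * math.sin(2 * math.pi * 220 * i / rate) for i in range(rate)]
curve = mouth_open_curve(silence + tone, rate)
print(len(curve), curve[0], round(curve[-1], 2))
```

The resulting per-frame weights would drive a single jaw blend shape; the mouth stays closed during the silence and opens once the tone starts.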

Audio2Gesture is another AI-driven feature that generates full-body or upper-body motion based solely on audio input, such as human speech (not music). The neural network powering Audio2Gesture interprets the speech to create body movements, while facial animation is handled by the separate Audio2Face tool. This makes Audio2Gesture particularly useful in dialogue-heavy scenes, where it offers an efficient way to generate natural body motion.

Nvidia’s AI Room Generator is an extension that leverages generative AI (primarily ChatGPT) to help users create 3D environments. By defining an area in the scene and providing a text prompt, users can quickly generate an AI-designed room layout. The tool is enhanced by DeepSearch, which lets users search for assets stored on the Nucleus server using natural-language queries. DeepSearch can even be trained on the user’s own data, enabling it to automatically generate and place 3D objects within a scene and significantly reducing the time required to build complex environments.

Another impressive innovation in Nvidia’s AI toolkit is real-time hair simulation. Traditionally, simulating hair and fur is a resource-intensive task due to the complexities of physics, gravity, wind, and interaction. Nvidia uses neural physics to predict how hair would behave in the real world, providing lifelike hair with realistic thickness and movement. This breakthrough makes real-time hair simulation much more feasible, even in demanding animations or game scenes.

Finally, Nvidia’s ray reconstruction technology improves upon traditional path tracing, a method used to create highly realistic images by simulating light behavior in a scene. While capable of producing stunning results, path tracing is computationally intensive and typically used in offline rendering. Nvidia’s ray reconstruction system optimizes the number of rays, employs AI-driven denoising, and delivers high-quality images with reduced performance overhead. This makes it ideal for games, PC-oriented applications, and rendering within Unreal Engine.
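Why path tracing is so expensive, and why AI denoising helps, comes down to Monte Carlo sampling: each pixel is an average over random light paths, and the noise in that average shrinks only with the square root of the sample count, so halving the noise costs four times as many rays. The Python sketch below demonstrates this with a hypothetical one-dimensional “radiance” function standing in for real light transport; it says nothing about how Nvidia’s ray reconstruction works internally.

```python
import math
import random


def radiance(x: float) -> float:
    # Hypothetical stand-in for the light carried by one random path;
    # its exact average over [0, 1] is 1/3.
    return x * x


def estimate_pixel(samples: int, rng: random.Random) -> float:
    """Monte Carlo estimate: average radiance over `samples` random paths."""
    return sum(radiance(rng.random()) for _ in range(samples)) / samples


def rms_noise(samples: int, trials: int = 500, seed: int = 0) -> float:
    """Root-mean-square error of the pixel estimate across many re-renders."""
    rng = random.Random(seed)
    errors = [(estimate_pixel(samples, rng) - 1 / 3) ** 2 for _ in range(trials)]
    return math.sqrt(sum(errors) / trials)


for n in (16, 64, 256, 1024):
    print(f"{n:5d} samples/pixel -> RMS noise {rms_noise(n):.4f}")
```

Each fourfold increase in samples should roughly halve the printed noise. That slow convergence is the gap a denoiser tries to close by reconstructing a clean image from fewer rays.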

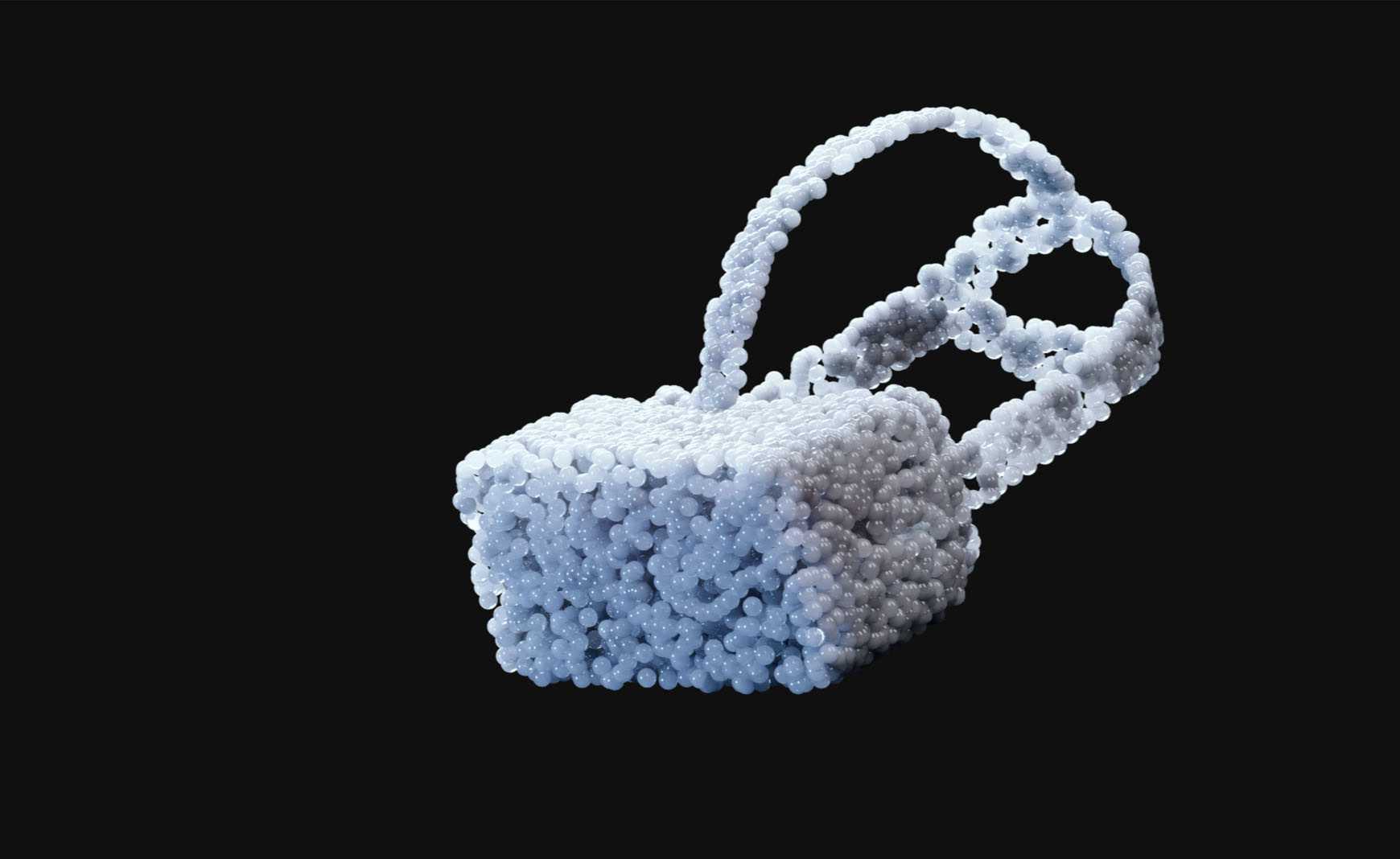

10. Bernini

Bernini is an Autodesk research project that offers AI-powered 3D model generation from text prompts. While still under development, Bernini has the potential to accelerate the creation process, giving artists a quick way to generate basic 3D models that can then be refined with the optimization tools available in 3ds Max. However, like similar technologies, it may struggle with geometry accuracy and texture quality, making it more suitable for blockouts and visualization than for final output. It may eventually become part of the 3ds Max toolkit.

Summing Up

As AI continues to evolve, it’s becoming increasingly integrated into the 3D production pipeline. The tools highlighted in this article offer a glimpse into the potential of AI to optimize workflows, enhance creativity, and boost realism. While these tools are valuable assets for creators, it’s essential to remember that AI is an instrument, not a replacement for human creativity and expertise.

If you’ve had the opportunity to explore any of these plugins or others, please share your experiences. We’re always looking for ways to improve our pipelines, and colleagues are invaluable sources of knowledge and inspiration.

Happy creating!